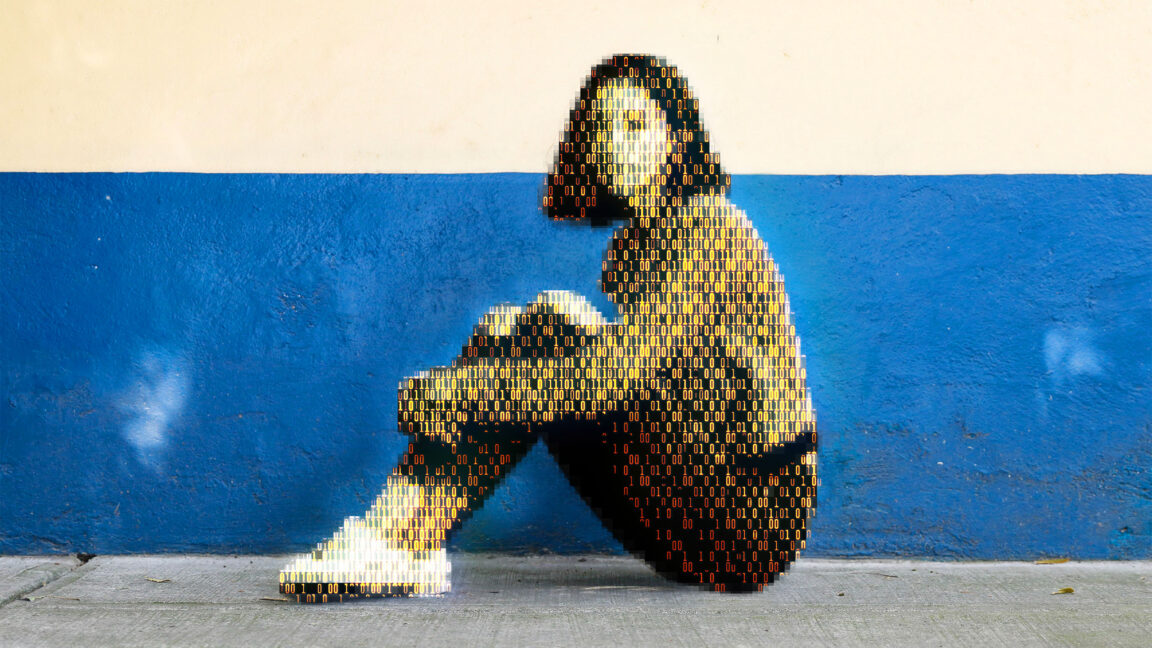

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an AI model designed to flag unknown CSAM at upload. It’s the earliest AI technology striving to expose unreported CSAM at scale.

There isn’t really much fundamental difference between an image detector and an image generator. The way image generators like Stable Diffusion work is essentially by starting from an image that’s nothing but random static and telling the generator “find the cat that’s hidden in this noise.”

It’ll probably take a bit of work to rig this CSAM detector up to generate images, but I could definitely imagine it happening. It’s going to make an already complicated philosophical debate even more complicated.