- 21 Posts

- 454 Comments

1·1 month ago

This is Microsoft enshittifying the platform they acquired to squeeze more revenue. But this is totally fine, because as user-hostile and evil as the Microsoft corporation measurably is, they made a cute jpg a few years ago about loving open source or something (yeah, I know, those are different things, but I’m calling out their PR bullshit and the usual bootlickers).

102·2 months ago

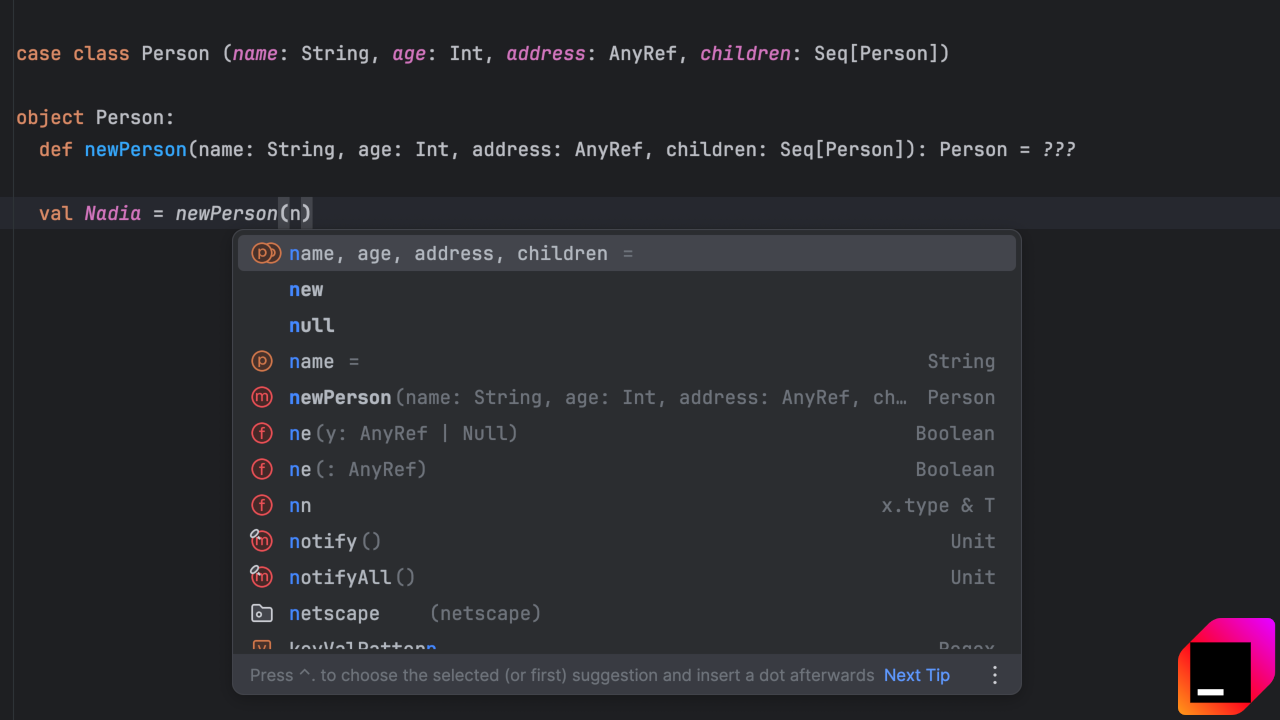

102·2 months agoI’ll bet people said the same thing when Intellisense started suggesting lines completions.

I’m sure many did, but I’m also pretty sure it’s easy to draw a line between code assistance and LLM-infused code generation.

351·2 months ago

Telegram never was private, and group chats never were encrypted (that’s not an opinion: the feature simply is missing). If anything, they are just removing their false and deceptive claims. That those claims stayed up for so long is something I can’t wrap my head around.

6·2 months ago

> I’d argue XMPP is less ideal than Matrix because groups are located on a single server, which makes them easier to take down than Matrix’ replicated state.

That is true, but it’s never been a problem in my relatively long experience with XMPP: some server software can be used as a cluster and distributed, making it highly available (basically, the whole of WhatsApp runs on a fork of ejabberd), and the comparatively tiny resource usage of XMPP contributes to its stability.

XMPP does have a spec for F-MUC (distributed rooms somewhat like Matrix, many years before Matrix), and my rationale as to why it never caught on despite a whole decade of “competition” from Matrix is that it’s a problem that just doesn’t need solving. The price to pay for it is hefty: Matrix resource usage (bandwidth, CPU, RAM) is insane, its protocol complexity makes it a single-vendor implementation (which is risky on very practical grounds), and it’s not even bulletproof for the niche use-case it set out to tackle: in the end, your identity server on Matrix remains centralized.

You can tell that I’m partial to XMPP, but that’s only after having been a service operator for years, with my original expectations largely favouring Matrix.

1·2 months ago

I think you should give Trilium(Next) Notes a try:

- it has the hierarchical notes structure that you are familiar with in Obsidian

- it has better ways of keeping things organized (attributes can be values or references, can be shared and inherited, which provides a flexible framework for having note “types” as templates that can be extended, e.g. people vs. colleagues, businesses vs. companies, etc.)

- it has the concept of note hoisting (which lets you focus on a note and its sub-notes, so other projects/spaces don’t get in the way of autocomplete and placing references), and workspaces that build further on top of that

- it can be used standalone (local client/offline-only, like Obsidian), but coupling it with a remote server opens up more interesting use-cases (syncing, sharing notes with others by public URLs, one-user/multi-client editing), which gives the best of both worlds (local-first/online-first) and lets you access your personal notes on devices you don’t necessarily own (which Obsidian doesn’t). The mobile app story isn’t great (it’s a PWA with limited offline capabilities at the moment), but it isn’t worse than the alternatives either (I can’t really work and think long form on a handheld, no matter the editor experience, but perhaps that’s just me).

1·2 months ago

You need to list out your requirements. What do you want to do? Where do you need your data? Do you care about open source? Self-hosting? Do you have an idea how your content will be organized? Will you ever need to tap into it as data? Etc.

1·2 months ago

Have you tried Trilium Notes? Not as hyped and polished, but does extraordinarily well IME.

1·2 months ago

I didn’t like Obsidian’s lack of attribute structuring/typing and the fact that it cannot serve over a web UI (for wherever you cannot install the heavy client, or just to share notes via URL), and found Trilium Notes to be doing that perfectly, and much much more. Highly recommend.

2·2 months ago

You can host (tens? of) thousands of XMPP sessions on an RPi at the back of your router, or in a field hooked to a PV panel and a SIM card, without any of “the wealthy” knowing or caring about it, though. The difference with Signal is that everyone can do that, and everyone doing it expands the network and makes it more resilient for the benefit of all.

1·2 months ago

How it works (to simplify): they gave up on Matrix clients ever becoming performant and well-behaved on handheld devices (because of the absurd complexity of the protocol) and, instead of doing something about that, decided to shift the client logic onto the server and castrate the clients (esp. for offline features). It’s also good short-term business, because it makes hosting Matrix even more cumbersome and expensive, giving the midscale/corporate deployments that were previously on the fence about their self-hosting costs (due to poor design and scalability) a compelling reason to just pay Element for that (while probably contemplating an alternative future).

11·2 months ago

Matrix has the tendency to require all participants’ servers to replicate all of the room state (who joined when, who said what when, whose avatar changed to what when, …) practically forever, and sucks up a ton of bandwidth and CPU for the privilege. It’s pretty bad, unfixable, and, if you ask me, overhyped.

4·2 months ago

Speaking about XMPP, compared to centralized services, at least the “who talks to whom” and metadata concerns in general are partially mitigated by not having all the metadata converge towards a single host, being able to self-host, and being able to host behind Tor/I2P/…

1·2 months ago

Other options for what exactly? Telegram practically has the same privacy and encryption guarantees as late 90’s forums and bulletin boards. If you want to learn nothing from that, keep using a centralized nonstandard service deprived of end-to-end encryption!

7·3 months ago

If you have the impression that there’s a dominant, homogeneous “mass” sharing the same opinion, you are right there in the middle of an information bubble and a victim of those “algorithms”.

6·3 months ago

I’m not a cryptographer, so I can’t really pass judgement on the poster’s abilities or reputation, but what’s for sure is that this piece reads more like a bingo card of a person’s favourite “crypto stuff” and how partially it overlaps with some characteristics of OMEMO, rather than a thorough and substantiated cryptanalysis of the protocol and its flaws for real-world usages and threats.

Some snarky remarks like

> OMEMO doesn’t attempt to provide even the vaguest rationale for its design choices, and appears to approach cryptography protocol specification with a care-free attitude.

are needlessly opinionated, inflammatory and unhelpful, and tell more about the author and their lack of due diligence (in reaching out to people and reading past public discussions) than build a story of what the problem is, why it matters, and how to remediate it.

Don’t get me wrong, I would have loved this piece to be something else, and to reveal actual problems (which incidentally would have been a great boost to the author’s credibility and fame, considering that OMEMO underwent several audits and assessments in recent history, including by several state agencies in the German and French governments…), but here we are, with one more strongly opinionated piece of whatever on the internet, and no meat in it to make the world a better place.

1·3 months ago

Matrix seemed interesting right until I got to self-hosting it. Then, getting to know it from up close, and the absolute trainwreck that the protocol is, made me love XMPP. Matrix has no excuse for being so messy and fragile at this point. You do you, but I decided that it isn’t worth my sysadmin time (especially when something like ejabberd is practically fire and forget).

1·4 months ago

I don’t think our views are so incompatible, I just think there are two conflicting paradigms supporting a false dichotomy. One is prevalent in the business world, where “the cost of labour dwarfs the cost of hardware” and where it’s acceptable to trade some (= a lot of) efficiency for convenience/saving man-hours. But this is the “self-hosted” community, where people are running things on their own hardware, often in their own house, paying the high price of inefficiency very directly (electricity costs, less living space, more heat/noise, etc.).

And Docker is absolutely fine and relevant in this space, but only when “done right”, i.e. when containers are not just spun up as isolated black boxes, but carefully organized so as to avoid overlapping services and wasted resources, in which case managing containers ends up requiring more effort, not less.

But this is absolutely not what you suggest. What you suggest would have a much greater wastage impact than “few percent of cpu usage or a little bit of ram”, because essentially you propose for every container to ship its own web server, application server, database, etc… We are no longer talking “few percent” of overhead of the container stack, we are talking “whole new machines” software and compute requirements.

So, in short, I don’t think there’s a very large overlap between the business world throwing money at their problems and the self-hosting community, and so the behaviours are different (there’s more than one way to use containers, and my observation is that it goes very differently in either). I’m also not hostile to containers in general, but they cannot be recommended in good faith to self-hosters as a solution that is both efficient and convenient (you must pick one).

1·4 months ago

How does that compare to wallabag?

1·4 months ago

> I don’t care […] because it’s in the container or stack and doesn’t impact anything else running on the system.

This is obviously not how any of this works: down the line, those stacks will very much add up and compete against each other for CPU/memory/IO/…. That’s inherent to the physical nature of the hardware, its architecture and the finiteness of its resources. And here comes the balancing act; it’s just unavoidable.

You may not notice it as a result of having too much hardware thrown at it, but I wouldn’t exactly call this a winning strategy long term, and especially not in the context of self-hosting where you directly foot the bill.

Moreover, those server components which you are needlessly multiplying (web servers, databases, application runtimes, …) have spent decades optimizing for resource pooling (with shared buffers, caching, event scheduling, …). These efforts are all thrown away when they run for a single client/container, further lowering (and quite drastically at that) the headroom for optimization and scaling.
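To put rough shape on the duplication argument, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the `Service` type, the function names and especially the memory figures are hypothetical placeholders, not measurements of any real web server, database or runtime. It only compares the idle footprint of "every app ships its own stack" against "all apps share one stack".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    baseline_mb: int  # hypothetical idle footprint, NOT a measurement


# Hypothetical stack that each self-hosted app depends on.
STACK = [
    Service("web server", 50),
    Service("database", 200),
    Service("app runtime", 150),
]


def duplicated_total(services, apps):
    """Idle memory when every app ships its own copy of every service."""
    return apps * sum(s.baseline_mb for s in services)


def shared_total(services, apps, per_app_mb):
    """Idle memory when all apps share one instance of each service.

    per_app_mb is an assumed incremental cost of hosting one more app
    on the shared instances (extra schema, vhost, worker, ...).
    """
    return sum(s.baseline_mb for s in services) + apps * per_app_mb


if __name__ == "__main__":
    apps = 10
    print("duplicated:", duplicated_total(STACK, apps), "MB")
    print("shared:    ", shared_total(STACK, apps, per_app_mb=20), "MB")
```

With these made-up numbers the gap is a multiple rather than a "few percent". The real ratio depends entirely on the actual services and workloads, so treat it as a way to frame your own measurements rather than as a result.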

Isn’t that the essence of the issue, that those models are loaded with biases, that might or might not overlap with dominant ones in inscrutable ways, hence producing new levels of confusion and indirection?