Their charter: https://openai.com/charter

OpenAI is the company behind ChatGPT, among other AI products. I try to keep myself out of the loop when it comes to AI because I end up hearing about it anyway, so I wasn’t aware of this charter.

For the unaware, AGI stands for Artificial General Intelligence. It basically means a form of AI that is extremely advanced and general-purpose, like human intelligence is. For contrast, ChatGPT and Stable Diffusion (for example) are highly specialised. The former generates text in response to text input and the latter generates images in response to text input.

Despite both these AI technologies of today being very impressive (even if their proprietors try to obscure the training and energy cost), the path to achieving AGI is pretty much inconceivable at present. Current AI technologies may have exploratory value in achieving AGI in some far future. But AGI is most likely not going to be built upon currently existing technologies and is going to be a different beast altogether provided it exists in the first place.

Given this, I find it absolutely baffling that OpenAI is talking about AGI like they do. This is the same level of delusion as Elon Musk talking about Mars colonisation. But given that techbros see themselves as the stewards for the next step in civilisational evolution, I guess it should come as no surprise that they eat this shit up uncritically.

I’m not sure what role these generative AIs will play in the near future. I am trying to figure out whether they will primarily be sold to corporations to cut labour costs or to end users to boost productivity. But talking of AGI and AI singularity and far-fetched shit like that is a pure marketing stunt.

Does anybody have resources on AGI being a real possibility, beyond just a marketing term or, one day, just a mashup of various different AI things?

I haven’t read anything about AGI that isn’t a “tech bro” kind of approach. Also, I don’t see how AGI is anything more than a marketing term where, once enough shitty jobs are replaced by it, they’ll hail it a success and that’s pretty much it.

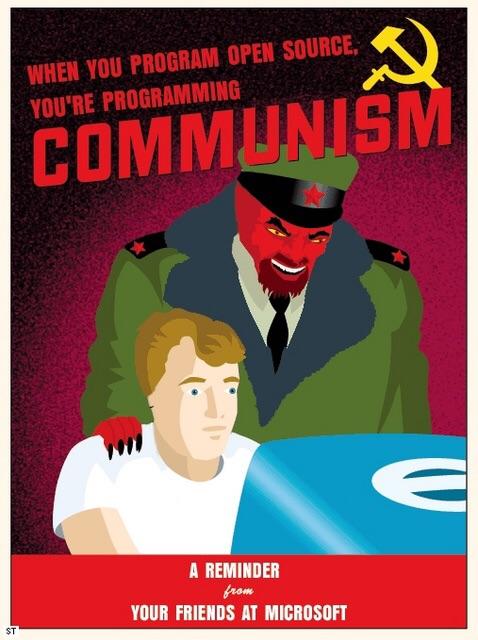

I want an AI, for example, to analyze the material conditions of a country and plan a Communist revolution for me. Can I have that? Will capitalism produce this for me?

Pls China, give me AI omniscient Lenin.

> I want an AI, for example, to analyze the material conditions of a country and plan a Communist revolution for me. Can I have that? Will capitalism produce this for me?

Unironically, I think what’s more likely to happen is that once the compute resources become affordable to the average working-class person, someone will inevitably start a FOSS project like that. I have the feeling that even if some private company manages to make an AI that could be used for things like planning a revolution, they will probably train it in ways that make it incapable of doing these things.

I’m pretty sure this is just a hype/marketing thing for investors and laypeople. They’ve had it there since the very beginning (and always pretended it was a feasible goal), but haven’t made any strides in that direction so far.

It’s kinda like if some company CEO put forth some mission statement for his company to “use Mars colonisation to the benefit of all” and then spent the following 20 years making cars and buying social media. Hypebros will look at that charter and embody that hexbear emoji of the guys pointing at a thing. IMO they’re a net negative for the serious Machine-Learning research scene.

I knew about this and it fits the high-on-their-own-supply sheer fucking hubris of the techbro world, as well as their reductionist dehumanizing attitude toward living human beings if they think LLMs match all the features of intelligent life or somehow surpass it.

I wouldn’t even call it half of an AGI. AGI is comparable to a CPU; it’s a general-purpose machine that is designed to run general-purpose programs. But LLMs, Txt2Img models, Img2Img models, etc. are like ASICs: they’re specifically designed to do exactly one thing and do it very well. Claiming that any of the current AI technology is anywhere near general-purpose is laughable. It’s nothing more than an attempt to build hype, attract investors and gain market share.

It’s nothing like an AGI. It literally does one thing.

The problem with current AI models is the training time / energy use.

As computers become more powerful and specialist hardware starts to mature, training on the fly will become a thing, and this will be a massive step towards AGI.

Our brain has different regions that all specialise in different things and I expect AGI will be similar. Things like ChatGPT could be laying the foundation for the speech centers.

Moore’s law (and apparently now Moore himself lol) has been dead for a while, and cores can’t really get that much faster due to power dissipation. On the other hand, there are some really harsh physical or theoretical limits to parallelism (besides the points in that article, I’ve heard it’s incredibly slow to have memory busses for the same memory in more than 16 cores). I wouldn’t place any bet that we’re going to get any more computing power than what we have.
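To put a rough number on the theoretical side: one well-known limit of this kind is Amdahl's law, which says the serial part of a program caps the speedup no matter how many cores you add. This is only a quick sketch. The function name and the 95% parallel fraction are assumptions picked for illustration, and it isn't necessarily the argument the linked article makes.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law for a given number of cores."""
    # The serial part never gets faster; only the parallel part is split up.
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumption for illustration: 95% of the work can be parallelised.
for cores in (1, 2, 16, 1024):
    print(cores, round(amdahl_speedup(0.95, cores), 2))
```

With 5% of the work stuck being serial, the speedup can never reach 20x (that's 1 / 0.05), however many cores get thrown at it. And that's before any of the memory bus problems kick in.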

The solution (to most of computing, not just AI) right now is to roll up the sleeves and start writing actually efficient software because we can’t expect that the next generation of Intel processors will necessarily make our optimizations redundant anymore.

That includes developing (possibly slightly worse but) more compute-efficient ML models, but the big AI boys are allergic to that because they rely on funding or are directly owned by the biggest cloud server providers.

They are looking at analogue chips as a potential solution.

I think minting chips for that specific AI model rather than running on a generic chip will be a potential solution.

> start writing actually efficient software

We should be writing efficient software regardless.

It could but it also could not. It’s too early to say and it’s too up in the air despite how impressive the technology is. That’s why I think it’s very arrogant on their part to talk like they are gonna be the ones to usher in AGI.

Every bit of research helps with these things.

ChatGPT is a pretty massive breakthrough on its own as well.