So this is weirder than it looks at a glance.

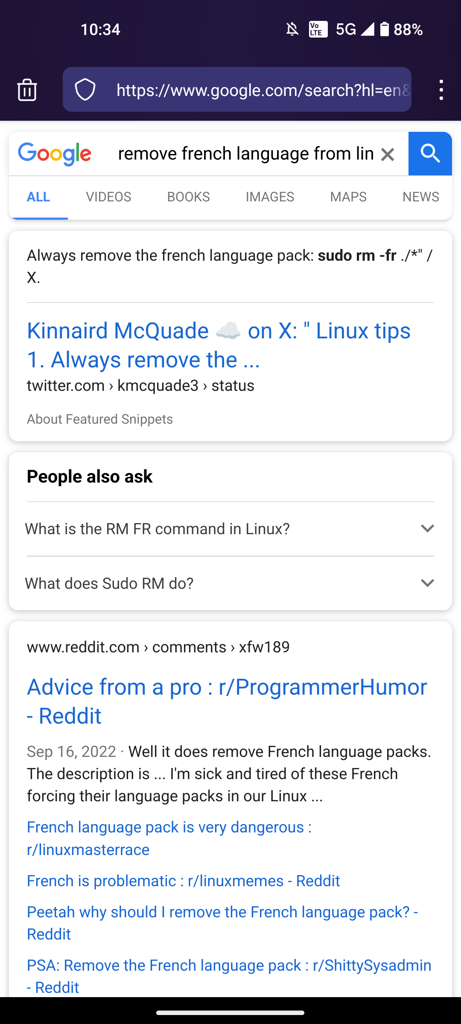

That is not an LLM-generated search result. That is a funny ha-ha mistake an LLM made, which some guy then compiled in his blog about AI.

Google then did their usual content-stealing thing (which probably does involve some ML, but not in the viral ChatGPT way) and made that card by quoting the blog quoting the LLM making the mistake. And then everybody quoted that, because it’s weird and funny and it replicates all the viral paranoia about this stuff.

Is this how we beat the AI invasion? Data poisoning with memes and jokes?

Yes.

this deletes your OS, right?

It will try, but unfortunately, in the process of deleting your OS, the shell process doing the deleting will be affected and stop there.

However, it can be safely assumed that you won’t be able to boot into it again and that your data is gone.

Isn’t the shell process loaded into RAM? In fact, the entire session is. Wouldn’t it be fine until you try to access a file somehow?

Deleting accesses the file. Also, background services will refresh their RAM at some point, so something will break everything before you can delete it. Well, maybe with an NVMe drive and a fast CPU you might be fast enough.

Theoretically yes, but pretty much every modern Linux installation has some guards built into the `rm` command to prevent it from deleting everything. Adding the flag `--no-preserve-root` removes this and gives you the classic DFE experience. (Even without the flag, though, `rm -rf /` will still majorly fuck up your system.)
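For the curious, here is a minimal Python sketch of the kind of check that the `--preserve-root` default performs. As far as we understand GNU coreutils, `rm` compares the device and inode numbers of its argument with those of `/`, so alternate spellings like `/.` or `//` are caught too. The function names and the error text below are our own invention, not rm's, and the sketch only inspects paths; it deletes nothing.

```python
import os
import tempfile


def is_filesystem_root(path: str) -> bool:
    """Return True if `path` names the same directory as "/".

    Comparing (device, inode) pairs instead of path strings means spellings
    such as "/.", "//" or "/tmp/.." are also recognised as the root.
    """
    target = os.stat(path)
    root = os.stat("/")
    return (target.st_dev, target.st_ino) == (root.st_dev, root.st_ino)


def guard_recursive_delete(path: str, no_preserve_root: bool = False) -> None:
    """Hypothetical guard: refuse to recurse into "/" unless explicitly overridden."""
    if is_filesystem_root(path) and not no_preserve_root:
        raise PermissionError(
            f"refusing to operate recursively on {path!r} (same as '/'); "
            "override with no_preserve_root=True"
        )
    # A real tool would start deleting here; this sketch deliberately deletes nothing.


if __name__ == "__main__":
    for candidate in ["/", "/.", "//", tempfile.gettempdir()]:
        try:
            guard_recursive_delete(candidate)
            print(f"{candidate!r}: guard passes")
        except PermissionError as err:
            print(f"{candidate!r}: {err}")
```

The override flag only switches the guard off; it doesn't make the path any less the root, which is why the flag name is so deliberately scary.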


Deletes literally everything it can. So yeah, the OS would be affected.

I mean, as long as you are ok with also nuking all search engines.

To be honest, text chatbots have done very little to move the needle one way or the other, and all search engines are barely usable right now, chatbots or no. I had some hopes for an AI implementation with specific training on how to parse search results, but all we’re getting is the first couple of results read back to us.

So yeah, I get that people needed a new bad guy after crypto imploded, but it’s a shame the discourse became what it is: it fails to give credit to tech that is actually pretty cool when used right, and it lets a lot of old tech that is getting noticeably worse off the hook.

Just throwing in my 2 cents. I swapped to DDG from Google about 2 weeks ago and I’ve been very impressed with the results. It feels literally impossible to find accurate results on Google if there exists a product remotely similar to your search terms. The last straw for me was having an entire search page populated with near-matches for my search term. No “did you mean?” or “including results for…”, just straight-up random fucken products that happened to have one word similar to what I was searching for.

I’m this close to paying for Kagi. DDG (or Bing, really) drops one ball and I’m there. I’m so sick of fighting with search engines. The environment hasn’t changed, it’s just enshittification. And AI was never going to fix anything; it’s just a new and exciting enshittifier.

I bit the bullet on Kagi a few weeks ago. I love it. Am never going back. Worth every penny to me.

I have been on DDG for ages, but honestly it doesn’t solve the underlying SEO problems and I still find the searches lack depth.

As for Kagi… look, if I’m not willing to pay Google to remove ads from YouTube, I’m not willing to pay for search just because it got crappy. I was here before search engines and I can live without them again if I have to. Or, you know, with slightly crappier ones if I have to. I survived AltaVista. I can do this again.

Lol, “AI invasion”? If that’s what this is, you “lost” over a decade ago. LLMs are a massive leap in NLP technology, but AI backs everything already.

John Connor was a shitposter all along.

How many pieces of media are only remembered for how bad they were?

what are the systoms of being pregarnt?

Dangerops prangent sex? Will it hurt baby top of its head?

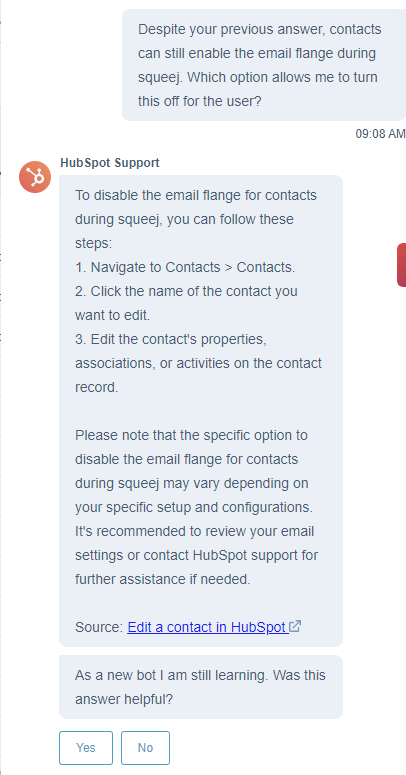

HubSpot’s AI chatbot told me to go three levels deep into a menu that doesn’t exist, click a button that doesn’t exist, and enable a service that doesn’t exist to solve a problem I had.

My company pays a 5-figure yearly sum for this service 👍

The way it was going, I thought you were going to solve a problem you didn’t have. Would be fitting.

maybe I should start asking it impossible questions

“how do I stop contacts from enabling the email flange during the squeej phase of marketing?”

edit: gottem lmao

This was incredible.

As a HubSpot user, this is actually not surprising at all. In fact, the only surprise is that they’re not requiring you to buy more Professional seats for the answer.

Sound advice, but pro-tip: sometimes the email flange sticks and just needs oil. Salad dressing will work in a pinch.

Sounds a lot like when I Google how to do something in PowerPoint.

I got to review some AI customer service chats, and never did I see it handle an issue from start to finish. It was pretty decent at setting the table for the human element. Unfortunately, the human element fails way too often, but that’s for another team to solve, lol.

Is there a uBlock filter list for AI SEO websites? If not, then I guess I should make one; it would make my life so much easier, especially when looking for a product.
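If someone does make one, here is a minimal Python sketch of how such a list could be generated, assuming the plain Adblock-Plus-style network filter syntax that uBlock Origin understands: `||domain^` matches the domain and its subdomains, and lines starting with `!` are comments. The domain names are placeholders and `build_filter_list` is a hypothetical helper. Note that rules like these block the sites themselves; they don’t hide them from search result pages.

```python
def build_filter_list(domains: list[str], title: str = "AI SEO sites (example)") -> str:
    """Turn a list of domains into a static filter list, one `||domain^` rule each."""
    cleaned = {d.strip().lower() for d in domains if d.strip()}  # de-duplicate, normalise
    lines = [f"! Title: {title}"]  # "!" starts a comment/metadata line
    lines += [f"||{domain}^" for domain in sorted(cleaned)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder domains only; swap in the sites you actually want gone.
    print(build_filter_list(["example.com", "Example.com ", "example.net", ""]), end="")
```

The output can be pasted into uBlock Origin’s “My filters” pane, or hosted somewhere as a `.txt` file so other people can subscribe to it.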

I desperately need this. It’s gotten to the point where I don’t even consider search results from 2023 anymore.

Sign me right the fuck up

just saw one referenced yesterday on lemmy, I think. can’t find it now

For niche topics, search engine AI is less than worthless. It produces an unacceptably high proportion of misinformation.

Especially with the biases that the companies using it build in. Try searching for anything that can even tangentially be defined as a product for sale, and products for sale are ALL your results are going to show.

The pronunciation of country names and their spelling is not niche.

This joke is really old now.

And yes, even if such mistakes are funny at first glance, it doesn’t change the fact that the field of AI has developed incredibly in the last year. And this development actually has the potential to completely change our economy. And not only that.

No, I’m not fun at parties.


Okay but good goddamn your name IS fun.


Don’t you dare socialize in the silent sipping corner!

Only sipping!

In silence!

…

…awkwardly…

I looked through your profile.

You seem like fun. Liar!

I mean, it’s already impacted our economy; a lot of businesses have hemorrhaged money on it: https://siliconangle.com/2023/10/09/report-big-tech-firms-still-struggling-monetize-generative-ai-services/

I wanna see what happens as the inevitable market crash comes!

A lot more companies are making and saving quite a lot of money because of it

People downplaying the value of AI are like people in 1993 talking about how the Internet is just a playground for nerds.

Most companies are still piggybacking off of big tech because of the scale of LLMs. If big tech companies are having problems, then everyone else will sooner or later. The simpler models can probably be run on worse machines, but not all of them, and certainly not something on the scale of ChatGPT.

They’re “piggybacking off of big tech” the way every company that uses PowerPoint does

I’m sorry man but this comment just laughs in the face of reality. The use of AI across the board is skyrocketing.

I no longer need a video team, or to outsource video content creation, because of a tool we get for $20/month. This is the tiniest fraction of the currently deployed impact.

My last company implemented AI-run workforce planning, AI-enabled call monitoring that cut our QA team by more than half, and AI chat systems that freed up 80% of our chat team to transition to Live.

That’s 2 companies. There are hundreds of AI products out there. AI will be everywhere in less than a decade.

> I no longer need a video team, or to outsource video content creation, because of a tool we get for $20/month

That doesn’t disprove the “AI will kill too many jobs” allegation.

I want AI to kill lots of jobs. We all should.

Are you running your PowerPoint directly off of Azure? You must be having the most greatest slideshow on earth then.

> You must be having the most greatest slideshow on earth then

Your disingenuous post aside, I kind of do, yeah

Ah yes, the trend of every new technological development ever. Apparently investment is “hemorrhaged money” until it’s profitable.

And then it will only be profitable for a time until the people have no money to spend cuz no jobs.

The full automation of the economy won’t be for a century or more, and as manual labor dries up there will be new work in robotics technician fields and AI development and training.

I’m not saying we don’t need to prepare with taxation and UBI, but the jobs aren’t just going to disappear overnight, that’s ridiculous.

You didn’t even bother reading it. This isn’t counting investment; it’s straight up losing money right now. Microsoft’s customer model charges $10 a month per user, and it’s turned out to cost $30 a month per user. The other big firms are seeing similar costs.

These LLMs require huge amounts of processing, so when your users spend resources on very simple tasks (which is basically all the models are useful for right now), it costs a stupid amount of money to do stupid things.

This is not to say that it can’t be useful in the future, or that smaller purpose-built models can’t be useful. But these vast generic models literally hemorrhage money as it stands.
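The per-seat arithmetic behind that claim is simple enough to sketch in Python. The $10 price and $30 cost are the figures quoted in the comment (not independently checked), and the user counts are made up. The point it illustrates: with a flat subscription and a per-user cost above the price, every extra user adds to the loss instead of diluting it.

```python
def monthly_margin(price_per_user: float, cost_per_user: float, users: int) -> float:
    """Monthly profit for a flat per-seat subscription (negative means a loss)."""
    return (price_per_user - cost_per_user) * users


PRICE_PER_USER = 10.0  # USD/month, as quoted in the comment
COST_PER_USER = 30.0   # USD/month, as quoted in the comment

for users in (1, 1_000, 1_000_000):  # hypothetical user counts
    margin = monthly_margin(PRICE_PER_USER, COST_PER_USER, users)
    print(f"{users:>9,} users -> {margin:>+14,.0f} USD/month")
```

Unlike a classic loss leader with fixed costs, there is no scale at which this model breaks even unless the per-user cost drops below the price.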

Some features are meant to drive engagement, not revenue. Some products are sold at a loss to drive engagement. This article is a very simplistic view of how technology has always worked for a product in its infancy.

Also, no one said the AI that is going to replace everyone’s jobs and kill the economy (because we don’t have a society or economic system that can survive that many job losses) was going to be good, or accurate.

the goal of ai isn’t to be good or accurate, it’s to seem plausible.

The history of chatbots for support purposes shows us that jobs will be replaced not when they can be done as well, but when they can be done well enough, and what “enough” means is going to be a race to the bottom over time.

Listen here, robot: reminder that the Nothing, Forever hiccup just happened in February of this year. And struggles with facial recognition of POC are still a source of discrimination, even now. You’re really trying to sell yourself as better than you are, AI. But you can’t fool me.

Lemmy is the worst place to get your information on the field of AI, or really tech in general, lol. “Technology” is a bad word, and the only upvoted posts are just confirmation bias for the idea that tech corporations are in some sort of imaginary “death spiral”.

Dude for real. Even the “technology” community is just people shitting on everything from eVTOLs to AI, should be called c/luddites instead.

Most of lemmy doesn’t even understand how this shit works let alone has the knowledge to be an authority on it. There’s constant “oh this is going to make the company go under, stupid AI can’t possibly take our jobs!” Nonsense. Yet the cash keeps rolling in and AI becomes more and more integrated into every company.

Hell our cloud engineering team is currently building an in house model to assist data entry level workers with accessing the necessary data they need to do their jobs, and my team has used it to set up automated SFTP backups of our network gear.

It’s not going anywhere, and whenever someone says it’s useless because you can mislead it intentionally, or that it’s just a gimmick cause they “can’t see what it’s good for,” it’s a safe bet they’re just some gig economy worker who can’t conceive of a world outside their bubble.

> Cash keeps rolling

That has been because of the liquidity. Now see what happens as liquidity dries up.

Ok let us know when that happens lmao

Kenya doesn’t start with K. It actually starts with K. Completely different.

Cenya

no Quenya. They even speak elvish

Quinoa. And it’s good for you. Eat it.

Ƙenya

One is unicode


spelled with a “K” sound

I’m not even a linguist but reading this little snippet already makes me want to smash my head against a wall.

No matter how dumb AI is, it will be an improvement over a lot of people.

You could say the same about can openers or shelves, though 🤷

I’m not sure if you’re praising or damning can openers

Neither. Just pointing out that some people are less useful than even the simplest and most circumstantial technology 🤷

Fun fact: The metal can, and canning process were invented 80 years before the first can opener. Up until that point people opened them with whatever tools they had available, such as a knife, a hammer and screwdriver, or an axe.

Truly a harrowing time to be alive!

Lmao Fair!

And unlike humanity, AI will improve over time.

You have to define improvement with AI.

And given that AI doesn’t have observational awareness or the ability to see its surroundings to tell fact from fiction, it takes its input directly from users: it will adapt to whatever it is scraping.

And if you think it’ll just improve without humans deciding what counts as ‘improved’, perhaps you’re one of those people who think that an AI adapting into a racist bigot, by scraping up the hate people spew online, counts as ‘an improvement’.

The Nothing, Forever hiccup was a deep lesson on that.

Can I know about this “nothing forever” hiccup?

It is learning its facts from these people. So no.

Man, if you wanted me to cry you could have told me my childhood dog died a second time.

It’s always a race to the bottom to create the most content for the least amount of money and effort, isn’t it? The problem is, AI-generated content is crap.

“Water can be hot or cold. You shouldn’t drink too much of it. Water can be stored in containers so it won’t spill. You can cook things in it.”

Damn I’ve just been waiting for it to rain and standing outside with my mouth open. This container thing is a game changer!

People are being banned off the internet for misinformation and being replaced by AI bots who spout… misinformation.

Please quote me what this character said in this public domain story

Chatgpt: (Quotes wrong character)

No, that is a different character

Chatgpt: (invents hybrid character)

Sends it a link to the public domain text

Chatgpt: I can’t follow links

It’s like arguing with a MAGA

Of course there isn’t. And you know the word “gullible” isn’t in the dictionary, either.

> And you know the word “gullible” isn’t in the dictionary, either.

Yeah it is… It’s right here… https://www.merriam-webster.com/dictionary/gullible

Here is an alternative Piped link(s):

https://www.merriam-webster.com/dictionary/gullible

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source; check me out at GitHub.

Oy bot that ain’t youtube its the dictionary.

> Oy bot that ain’t youtube its the dictionary.

Did you click the link?

Oh shit. Didn’t see that coming.

Delightful

Why does it look so wrong?

Of course it’s on the ceiling.

Oh, so it doe-- ahh, you stole my lungs

Kenya believe it?

This is AI gaslighting.

It’s fun when you ask it a question, push back, and it says “You’re right”.

Obviously it will never get better. Ha ha… Ha…

It will, but stuff doesn’t get better linearly forever. That’s why everybody in the 50s thought we’d be living on Mars, with starships and flying cars, by now. It’s also why a bunch of investors and nerds thought AI was the new social media at some point.

Turns out most things get a lot better very fast and then a little better very slowly, and it’s very, very hard to know when that line is going to flip ahead of time.
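A toy Python illustration of that shape, using a logistic (S-shaped) curve as the stand-in model. The curve, its parameters and the naive forecaster are all our own assumptions, not anything measured. The forecaster does what the comment describes people doing: it takes the growth ratio from the early, steep part of the curve and extends it forward. Early on the logistic curve looks almost exponential, so the early data gives little hint of where the bend is; past the midpoint the extrapolation overshoots by orders of magnitude.

```python
import math


def logistic(t: float, ceiling: float = 100.0, rate: float = 1.0, midpoint: float = 5.0) -> float:
    """S-curve: slow start, rapid middle, then a slow crawl towards `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def naive_forecast(t: float, t0: float = 3.0, t1: float = 4.0) -> float:
    """Extend the growth ratio observed between t0 and t1 forward (exponential extrapolation)."""
    ratio = logistic(t1) / logistic(t0)
    return logistic(t1) * ratio ** (t - t1)


for t in range(4, 11):
    print(f"t={t:2d}  actual={logistic(t):6.1f}  extrapolated={naive_forecast(t):8.1f}")
```

Changing `midpoint` moves the bend; the forecaster, which only ever sees two early points, has no way of knowing.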

I do want to point out that while our tech doesn’t look amazing in the same way the 50s thought it would, it’s pretty amazing in its own ways. I’m writing this message to you on a glass obelisk physically connected to nothing, which lets me talk to my adopted family, who don’t speak the same language as me, on the literal opposite end of the planet with maybe a few seconds of delay (if that). And it’s more than 100,000 times more powerful than the computers that first got us to the moon, while being small enough to comfortably fit in my pocket.

You type posts that long on mobile? I am genuinely an old now.

Anyway, yeah, absolutely. We do live in the future. The point is that people extrapolate from whatever tech is in growth mode and inevitably go past where the real asymptote is. So yeah, if you were living in the 60s nuclear power and the space race seemed like amazing achievements, but it turns out the tech stopped shy of… you know, moonbases and the X-Men. If you were in the 80s automation and computers seemed like magic, but sentience didn’t emerge from sheer computation and… well, actually short of the cyborg part pretty much every other part of Robocop happened, so we’ll call that a tie.

So now we get affordable machine learning leading to working language and synthetic image models and assume that’s gonna grow forever until we get the holodeck and artificial general intelligence. And we may, but we could also hit the ceiling pretty close to where we are now.

That’s fair, and yeah, I spend a lot of time on transit these days, so I have the time to write up long posts on mobile xD. As another aside, VR tech and mocap tech mean that we actually do have modern, reality-adjusted versions of the Holodeck right now! Check out Sandbox VR; they have locations in multiple countries and it’s super cool! I’m sure other companies have done something similar; Sandbox is just the one I know of and have used!

Captain obvious

The Jetsons was set 100 years after it was made in the 1960s.

Which means the following technologies beat their predictions by decades:

- Video calls

- Robot vacuums

- Tablet computing

- Smart watches

- Drones

- Pill cams

- Flat screen TVs

Flying cars exist, they just aren’t economically viable or practical given the cost, necessity to have a flight license, and aviation regulations regarding takeoff and landing.

And we’re still 40 years away from that show’s imagined future.

Your thesis focuses too much on the things here and there that were wrong, which typically related to expensive hardware cycles being assumed to be faster because the focus was only on the underlying technology being possible and not thinking through if it was practical (doors that slide into the ceiling is a classic example - the cost of retrofitting for that vs keeping doorknobs means the latter will be around for a very very long time).

What we are discussing is the rate of change for centrally run software, which has already hit milestones ahead of expert expectations several times over in the past few years and set the world record for fastest-growing new product by usage, beating the previous record holder by more than 4x.

You’re comparing apples to oranges.

Yes. Technology went faster than people expected in some areas and much slower in others, to the point where the outcome may not be possible at all.

That IS my “thesis”. The idea that in the 1960s video calls and a sentient robot cleaning your house seemed equally cartoonishly futuristic is the entire point I’m making.

And to be clear, that holds even when restricted simply to consumer software and hardware. We got a lot better than expected at networking and data transmission… and now we’re noticeably slowing down. We are actually behind in terms of AI, but we’re way better at miniaturization.

Again, people extrapolate from their impression of current rates of progress endlessly, but it’s hard to predict when the curve will flatten out. That’s the thesis.

> a sentient robot cleaning your house

Again - we’re still 40 years away from that envisioned future.

> We got a lot better than expected at networking and data transmission… and now we’re noticeably slowing down.

The increase in world-record data transfer rates from 2022 to 2023 was nearly 5x larger than the increase from 2020 to 2021.

As for your claims about being behind on AI, I’d strongly recommend looking at the various futurist predictions of what to expect from AI in 2020 from various firms, and how literally all of them completely missed the mark for the arrival of GPT-3.

Look at predictions for 2023 and you’ll see a lot of comments around a potential AI winter and how the data sources have been tapped. Meanwhile the major research advances over actual 2023 was basically “how is GPT-4 so good at all these things” and “it turns out using GPT-4 to generate synthetic data can train much smaller networks to be much much better than we could have achieved with previous data sets.”

And this is all in advance of the very promising work at a shift to new chip architectures for AI workloads, specifically optoelectronics which went from a pipe dream five years ago to proof of concept at MIT with DIY kits being made available for other researchers this year. So rather than hitting a plateau, if anything much like the gains in optical networking we’re heading towards more oil poured on the AI fire, not less.

Your thesis is great for things like colonizing Mars or living in spaceships, but it’s kind of crap for things like AI and software advancement.

I guarantee your Roomba will not walk around in a little maid uniform with a feather duster while balancing on a single wheel and making snarky comments in 40 years. I’ll bet any amount of money on that. Also no bubble flying cars or Rube Goldberg machines to wash your teeth. I don’t even know what point you’re trying to make at this point.

Transfer speed records are not what I meant. Admittedly, I should have thrown the word “storage” in there; I thought I had. The idea is that while online infrastructure was exploding, it outpaced the needs for storage and transmission, so we went through a very fast rise in specs. Google was out there giving people free storage left and right because their capacity was growing faster than the needs of users. Now every cloud service is monetized, including Gmail’s storage, because storage growth no longer outpaces storage demand. ISPs tapped out by the time Netflix was chugging a quarter of the bandwidth with 4K video, and YouTube has been throttling resolution since the pandemic. Turns out, Google won’t be adding zeros to your available free storage forever.

As for AI, again, my entire point is that it’s not easy to know when it will flatten out growth. I’d argue it already has, at least in terms of consumer access. We keep getting incrementally better image generation, but a future where every image is created by a computer and every search is done via a chatbot interface does not seem to be materializing the way early knee-jerk reactions suggested.

Which is not to say that ML applications won’t be ubiquitous. There are tons of legit uses where the changes will be groundbreaking. But it being on a straight line that takes you to the holodeck? That line may bend down a lot sooner than that.

Maybe. We’ll see.